Fooling automated surveillance cameras: Adversarial patches to attack person detection

An overview of the paper “Fooling automated surveillance cameras: Adversarial patches to attack person detection”. The authors propose an approach to generate adversarial patches for targets with a lot of intra-class variety, namely persons. The goal is to generate a patch that can successfully hide a person from a person detector. All images and tables in this post are from their paper.

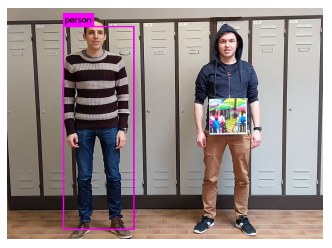

An adversarial patch that is successfully able to hide persons.

Generating adversarial patches against person detectors

The authors suggest an optimisation process (on the image pixels) where they try to find a patch that, on a large dataset, effectively lowers the accuracy of person detection. The loss of this process can be broken down into three parts:

- Non-printability score:- This factor represents how well the colours in our patch can be represented by a common printer.

  $$L_{nps} = \sum_{p_{patch} \in P} \min_{c_{print} \in C} \lvert p_{patch} - c_{print} \rvert$$

  where $p_{patch}$ is a pixel in our patch $P$ and $c_{print}$ is a colour in a set of printable colours $C$. The loss favours colours in our patch that lie close to colours in our set of printable colours.

- Total variation score:- This loss makes sure that our optimiser favours an image with smooth colour transitions and prevents noisy images. The score is low if neighbouring pixels are similar, and high if neighbouring pixels are different.

- Objectness score:- This loss minimises the objectness or class score output by the detector, since the goal is to fool the detector. Hence, the loss is defined as the maximum objectness score that the detector outputs for the image.

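We then use a simple weighted sum to calculate the total loss, $L = \alpha L_{nps} + \beta L_{tv} + L_{obj}$ (the non-printability, total variation and objectness terms respectively). The weights $\alpha$ and $\beta$ are chosen empirically rather than learned; it is the pixels of the patch that are optimised, using the Adam optimiser.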
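The three loss terms and their weighted sum can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the function names, the Euclidean colour distance, the total-variation form over right and lower neighbours, the use of the maximum person score as the objectness term, and the default values of `alpha` and `beta` are all assumptions made for the example.

```python
import numpy as np


def non_printability_score(patch, printable_colours):
    """Sum, over all patch pixels, of the distance to the closest printable colour.

    patch: (H, W, 3) float array in [0, 1]
    printable_colours: (K, 3) float array in [0, 1] (assumed colour set)
    """
    pixels = patch.reshape(-1, 1, 3)                      # (H*W, 1, 3)
    diffs = pixels - printable_colours[None, :, :]        # (H*W, K, 3)
    dists = np.linalg.norm(diffs, axis=-1)                # (H*W, K)
    return float(dists.min(axis=1).sum())


def total_variation(patch):
    """Low when neighbouring pixels are similar, high when they differ."""
    base = patch[:-1, :-1, :]
    down = patch[1:, :-1, :] - base    # difference to the pixel below
    right = patch[:-1, 1:, :] - base   # difference to the pixel on the right
    return float(np.sqrt(down ** 2 + right ** 2).sum())


def objectness_loss(person_scores):
    """Highest score among the persons the detector still finds (0 if none)."""
    scores = np.asarray(person_scores, dtype=float)
    return float(scores.max()) if scores.size else 0.0


def total_loss(patch, printable_colours, person_scores, alpha=0.1, beta=0.1):
    """Weighted sum of the three terms; alpha and beta are placeholder weights."""
    return (alpha * non_printability_score(patch, printable_colours)
            + beta * total_variation(patch)
            + objectness_loss(person_scores))
```

In a real attack these terms would be written in an automatic-differentiation framework so that the gradient of the total loss with respect to the patch pixels is available to the optimiser; the NumPy version only shows what each term measures.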

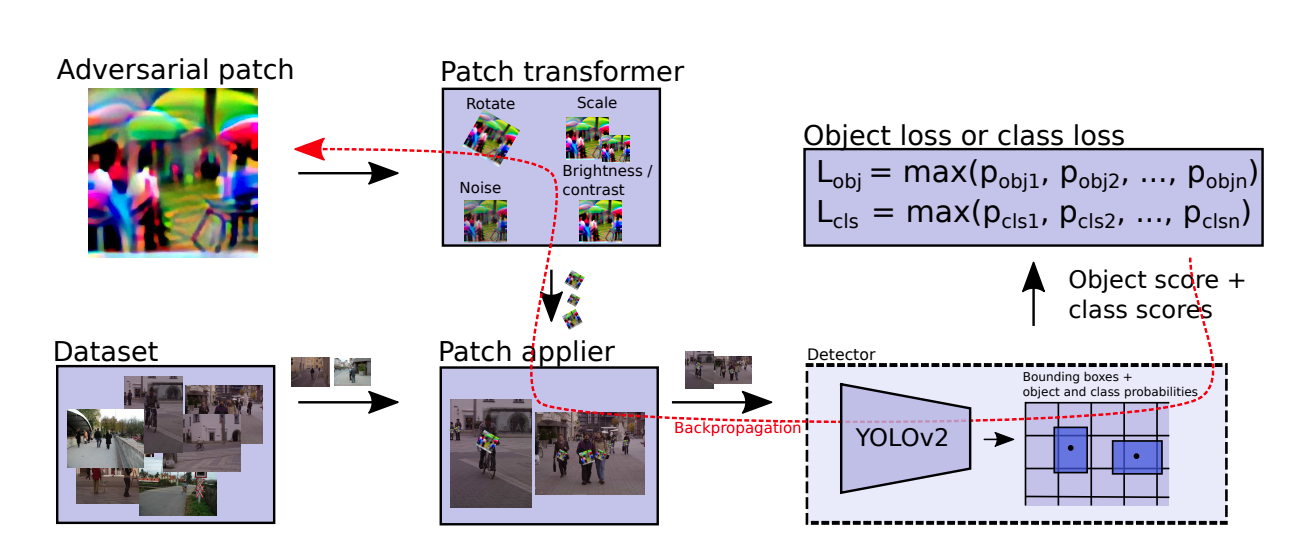

Methodology

We first need to run the target person detector over our dataset of images. This yields bounding boxes that show where people occur in the image according to the detector. At a fixed position relative to these bounding boxes, we then apply the current version of our patch to the image under different transformations (which are explained in Section 3.3 of the paper). The resulting image is then fed (in a batch together with other images) into the detector. We measure the scores of the persons that are still detected and use them to calculate the loss. Using backpropagation over the entire network, the optimiser then changes the pixels in the patch further in order to fool the detector even more.

Overview of the pipeline to get the object loss.
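The patch-application step of this pipeline can be sketched as below. It is only an illustration under stated assumptions: `apply_patch` and its parameters are hypothetical names, the patch is centred on each bounding box and resized with nearest-neighbour sampling, and the only transformations shown are a random brightness shift and additive noise, which stand in for the transformations described in the paper.

```python
import numpy as np


def apply_patch(image, patch, boxes, rng, rel_size=0.2,
                max_brightness_shift=0.1, noise_std=0.02):
    """Paste a randomly perturbed copy of `patch` at the centre of each box.

    image: (H, W, 3) float array in [0, 1]
    patch: (h, w, 3) float array in [0, 1]
    boxes: iterable of integer (x0, y0, x1, y1) person boxes from the detector
    rng:   a numpy.random.Generator
    """
    out = image.copy()
    height, width = image.shape[:2]
    for x0, y0, x1, y1 in boxes:
        # Patch side length is a fixed fraction of the smaller box dimension.
        side = max(1, int(rel_size * min(x1 - x0, y1 - y0)))

        # Nearest-neighbour resize of the patch to (side, side).
        rows = np.arange(side) * patch.shape[0] // side
        cols = np.arange(side) * patch.shape[1] // side
        resized = patch[rows][:, cols]

        # Illustrative transformations: brightness shift plus Gaussian noise.
        shift = rng.uniform(-max_brightness_shift, max_brightness_shift)
        noise = rng.normal(0.0, noise_std, resized.shape)
        perturbed = np.clip(resized + shift + noise, 0.0, 1.0)

        # Fixed position relative to the box: its centre, clipped to the image.
        top = max(0, (y0 + y1) // 2 - side // 2)
        left = max(0, (x0 + x1) // 2 - side // 2)
        bottom = min(height, top + side)
        right = min(width, left + side)
        out[top:bottom, left:right] = perturbed[:bottom - top, :right - left]
    return out
```

The patched images would then be batched and passed through the detector, and the scores of the persons it still finds feed the objectness term of the loss.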

The authors believe that, if this technique is combined with a sophisticated clothing simulation, it could be used to design a T-shirt print that makes a person virtually invisible to automatic surveillance cameras (using the YOLO detector).