PolyLoss: A Polynomial Expansion Perspective of Classification Loss Functions

This post gives an overview of the paper “PolyLoss: A Polynomial Expansion Perspective of Classification Loss Functions”. Cross-entropy loss and focal loss are the most common choices when training deep neural networks for classification problems. Generally speaking, however, a good loss function can take much more flexible forms and should be tailored to the task and dataset at hand. Simply adding a linear term to the loss brings significant improvements on the majority of tasks, as shown in Figure 1. All images and tables in this post are from the paper.

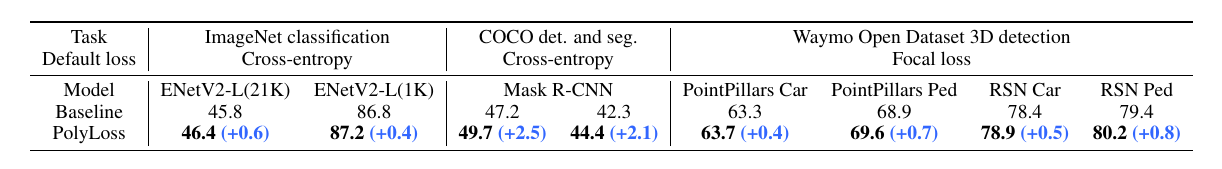

PolyLoss outperforms cross-entropy and focal loss on various models and tasks.

Unlike prior work, this paper claims to provide a unified framework for systematically designing a better classification loss function. The PolyLoss framework relates to three areas:

- Loss for class imbalance

- Robust loss to label noise

- Learned loss functions

PolyLoss

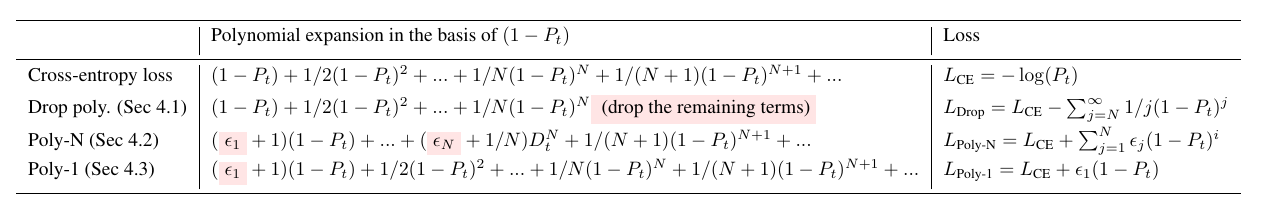

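The core intuition of the paper is simple: common classification losses such as cross-entropy and focal loss can be viewed as a linear combination of polynomial terms, and the coefficients of those terms can be adjusted for the task at hand. Table 1 compares different losses expressed in this framework.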

Comparing different losses in the PolyLoss framework.
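Since the formulas are only shown as images in this post, here is a sketch of the expansion in text form. The symbols are assumptions chosen to match common usage: $P_t$ is the predicted probability of the target class, $\gamma$ is the focal loss focusing parameter, $\alpha_j$ are the polynomial coefficients, and $\epsilon_1$ is the single Poly-1 hyperparameter. Expanding $-\log(P_t)$ as a Taylor series in $(1 - P_t)$ gives:

$$
\begin{aligned}
L_{\text{CE}} &= -\log(P_t) = \sum_{j=1}^{\infty} \frac{1}{j}\,(1 - P_t)^{j} \\
L_{\text{FL}} &= -(1 - P_t)^{\gamma}\,\log(P_t) = \sum_{j=1}^{\infty} \frac{1}{j}\,(1 - P_t)^{j+\gamma} \\
L_{\text{Poly}} &= \sum_{j=1}^{\infty} \alpha_j\,(1 - P_t)^{j} \\
L_{\text{Poly-1}} &= -\log(P_t) + \epsilon_1\,(1 - P_t)
\end{aligned}
$$

Read this way, cross-entropy fixes every coefficient at $1/j$, focal loss shifts the power of each term by $\gamma$, and Poly-1 perturbs only the coefficient of the leading linear term, changing it from $1$ to $1 + \epsilon_1$.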

The final formulation they advocate is Poly-1, which requires tuning only one hyperparameter. More importantly, their work highlights the limitations of common loss functions and shows that a simple modification can lead to improvements even on well-established state-of-the-art models. These findings encourage exploring and rethinking loss function design beyond the commonly used cross-entropy and focal loss, as well as beyond the simple Poly-1 loss proposed in this work.
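To make the Poly-1 idea concrete, here is a minimal sketch in plain Python for a single example. It assumes Poly-1 takes the form of cross-entropy plus `epsilon * (1 - pt)`, where `pt` is the softmax probability of the target class. The function names `softmax` and `poly1_cross_entropy` and the default `epsilon=1.0` are illustrative choices for this post, not taken from the paper's code.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def poly1_cross_entropy(logits, target, epsilon=1.0):
    """Poly-1 loss for one example (illustrative sketch).

    logits:  list of raw class scores
    target:  index of the true class
    epsilon: the single Poly-1 hyperparameter (assumed default)
    """
    pt = softmax(logits)[target]   # probability of the true class
    ce = -math.log(pt)             # standard cross-entropy
    return ce + epsilon * (1.0 - pt)  # add the weighted linear term


if __name__ == "__main__":
    logits = [2.0, 0.5, -1.0]
    print("CE    :", poly1_cross_entropy(logits, target=0, epsilon=0.0))
    print("Poly-1:", poly1_cross_entropy(logits, target=0, epsilon=1.0))
```

Setting `epsilon=0.0` recovers plain cross-entropy, which is one way to sanity-check an implementation before tuning the hyperparameter.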