The Multi-Armed Bandit Problem and Its Solutions

An overview of the topic “The Multi-Armed Bandit Problem and Its Solutions”.

The exploration vs exploitation dilemma exists in many aspects of our life. Say, your favorite restaurant is right around the corner. If you go there every day, you would be confident of what you will get, but miss the chances of discovering an even better option. If you try new places all the time, very likely you are gonna have to eat unpleasant food from time to time.

If we have learned all the information about the environment, we are able to find the best strategy by even just simulating brute-force, let alone many other smart approaches. The dilemma comes from the incomplete information: we need to gather enough information to make best overall decisions while keeping the risk under control. With exploitation, we take advantage of the best option we know. With exploration, we take some risk to collect information about unknown options. The best long-term strategy may involve short-term sacrifices. For example, one exploration trial could be a total failure, but it warns us of not taking that action too often in the future.

Background

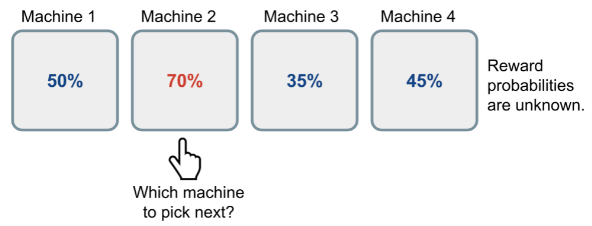

The multi-armed bandit problem is a clssic problem that demonstrates the exploration vs exploitation dilemma well. Imagine you are in a casino facing multiple slot machines and each is configured with an unknown probability of how likely you can get a reward. The question is: What is the best strategy to achieve the highest long-term rewards?

In the blog, the author only covered the case of finite number of trials, and mentioned that this scenario offers a new type of exploration problem. For instance, if the number of trials is smaller than the number of slot machines, we cannot even try every machine to estimate the reward probability and hence we have to behave smartly w.r.t. a limited set of knowledge and resources (i.e. time).

An illustration of how a Bernoulli multi-armed bandit works. The reward probabilities are unknown to the player.

A naive approach can be to continue playing on the machine for many rounds so as to eventually estimate the “true” reward probability. However, this is quite wasteful and does not guarantee the best long-term rewards regardless.

Definitions

A Bernoulli multi-armed bandit can be described as a typle of , where:

- We have

machines with reward probabilities,

.

- At each time step

, we take an action

on one slot machine and receive a reward

.

is a set of actions, each referring to the interaction with one slot machine. The value of action

is the expected reward,

. If action

at the time step

is on the i-th machine, then

.

is a reward function. In case of Bernoulli bandit, we observe reward

in a stochastic fashion. At time step

,

may return reward 1 with a probability

or 0 otherwise.

It is a simplified version of Markov decision, as there is no state .

The goal is to maximize the cumulative reward

. If we know the optimal action with the best reward, then the goal is same as to minimize the potential regret or loss by not picking the optimal action.

The optimal reward probability

of the optimal action

is:

Our loss function is the total regret we might have by not selecting the optimal selecting the optimal action up to the time step :

Based on how we do exploration, there are several ways to solve the multi-armed bandit problem:

- No exploration: the most naive approach and a bad one.

- Exploration at random

- Exploration smartly with a preference to uncertainty.

-Greedy Algorithm

-Greedy Algorithm

The -greedy algorithm takes the best action most of the time, but does random exploration occasionally. The action value estimated according to the past experience by averaging the rewards associated with the target action

that we have observed so far:

where is a binary indicator function and

is how many times the action

has been selected so far,

.

According to the -greedy algorithm, with a small probability

we take a random action, but otherwise (which should be most of the time), we pick the best action that we have learnt so far.

Upper Confidence Bounds

Random exploration gives us an opportunity to try out options that we have not known much about. However, due to the randomness, it is possible we end up exploring a bad action which we have confirmed in the past (bad luck!). To avoid such inefficient exploration, one approach is to decrease the parameter ε in time and the other is to be optimistic about options with high uncertainty and thus to prefer actions for which we haven’t had a confident value estimation yet. Or in other words, we favor exploration of actions with a strong potential to have a optimal value.

The Upper Confidence Bounds (UCB) algorithm measures this potential by an upper confidence bound of the reward value, , so that the true value is below with bound

with high probability. The upper bound

is a function of

; a larger number of trials

should give us a smaller bound

.

In UCB algorithm, we always select the greediest action to maximize the upper confidence bound:

Hoeffding’s Inequality

If we do not want to assign any prior knowledge on how the distribution looks like, we can get help from “Hoeffding’s Inequality” - a theorem applicable to any bounded distribution.

Let be the i.i.d. random random variables and they are all bounded by the interval

. The sample mean is

. Then for

, we have:

Given one target action , let us consider:

as the random variables,

as the true mean,

as the sample mean,

- And

as the upper confidence bound,

Then we have, .

We want to pick a bound so that with high chances the true mean is below the sample mean + the upper confidence bound. Thus, should be a small probability. Thus, we set:

UCB1

One heuristic is to reduce the threshold in time, as we want to make more confident bound estimation with more rewards observed. Setting

, we get UCB1 algorithm:

and,

Bayesian UCB

In UCB or UCB1 algorithm, we do not make any prior on the reward distribution and therefore we have to rely on the Hoeffding’s Inequality for a very generalized estimation. If we are able to know the distribution upfront, we would be able to make a better bound estimation.

For instance, if we expect the mean reward of every slot machine to be a Gaussian, we can set the upper bound as 95\% confidence interval by setting to be twice the standard deviation.

Thompson Sampling

At each time step, we want to select action according to the probability that

is optimal:

where is the probability of taking action

given history

.

For the Bernoulli bandit, it is natural to assume that follows a Beta distribution, as

is essentially the success probability

in Bernoulli distribution. The value of Beta(

) is within the interval

;

and

correspond to the counts when we succeeded or failed to get a reward respectively.

Initially, we set the Beta parameters based on some prior knowledge or belief. For instance,

and

; we expect the reward probability to be around 50\% but are not very confident.

and

; we strongly believe that the reward probability is 10\%.

At each time , we sample an expected reward,

from the prior distribution

for every action. The best action is selected among samplers. After the true reward is observed, we can update the Beta distribution accordingly, which is essentially doing Bayesian inference to compute posterior with the known prior and the likelihood of getting the sampled data.

\

Thompson sampling implements the idea of probability matching. Because its reward estimations are sampled from posterior distributions, each of these probabilities is equivalent to the probability that the corresponding action is optimal, conditioned on observed history.

Conclusion

We need exploration because information is valuable. In terms of the exploration strategies, we cannot do exploration at all, focusing on the short-term returns. Or we occasionally explore at random. Or even further, we explore and we are picky about which options to explore — actions with higher uncertainty are favored because they can provide higher information gain.

Summary of algorithms discussed.